Not All Runtime Code Is Written By Programmers

Because of my own programming experience and the languages I've used for years, I have always been stuck on the idea that all runtime code in a program (or at least the most significant part of it) is written by a software engineer by hand, and, well, that is still true for almost all code. Recently I re-read the classic "Pragmatic Programmer" by David Thomas and Andrew Hunt and noticed the "Code Generation" chapter, which had not seemed that significant to me years ago.

Re-reading it made me think about the topic more comprehensively: hey, I've been doing a lot of compile-time programming in Nim for a few years, so how about other languages?

I did some research on the state of metaprogramming in other languages, and things are much better than they were a few years ago. Tools like C#.NEXT and Scala 3 macros have appeared, and these belong to mainstream programming languages on mainstream platforms, namely .NET and the JVM. Several years ago only Nim, D, and C++ offered decent means of compile-time programming.

This essay is a humble endeavor to codify this knowledge, especially in light of recent developments in machine learning that have given us the fascinating GitHub Copilot and ChatGPT.

Runtime Code Definition

First, let me clarify that I use the term runtime code here intentionally.

I try to be as precise in terminology as possible: runtime code is code that maps directly onto either bytecode or native machine instructions, setting aside any modifications made by the optimizer.

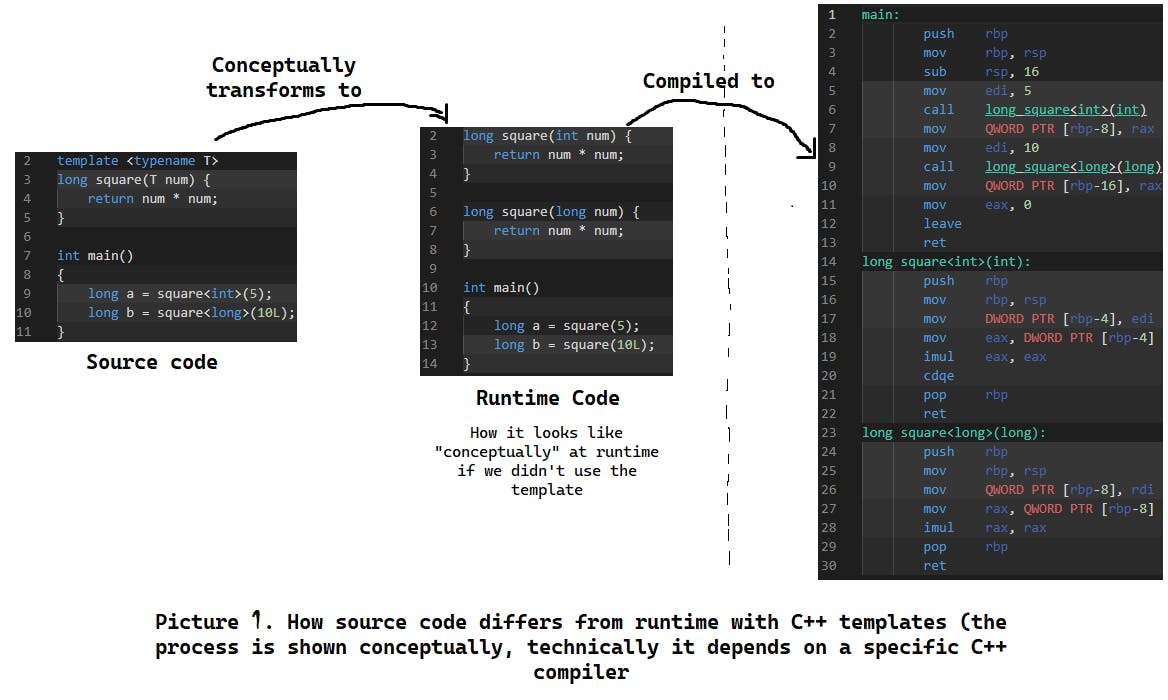

Picture 1 demonstrates why I say "mapped directly". It shows the difference between the source code and the code that is actually executed at runtime after C++ templates have been resolved into concrete implementations (also, if we hadn't used the square template, all of that code would have been eliminated by the optimizer or not generated at all).

In terms of modern compilers, this is the state of the annotated syntax tree right before the intermediate representation is generated.

Most commonly we write runtime code manually. In rare cases we use code generation at the build phase, simple in-source tools like C preprocessor directives, or simple forms of code executed at compile time, mainly generics (Java, C++) and variadic templates for unfolding uniform operations (C++).

Metaprogramming Classification

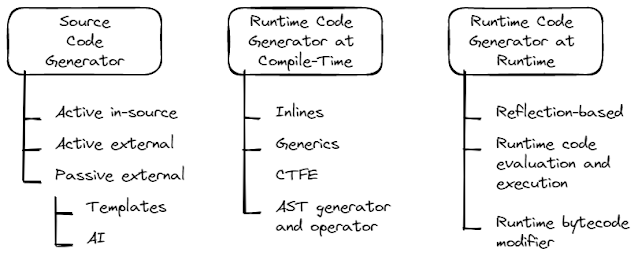

So what are the overall possibilities of metaprogramming as a whole? Based on my experience and on the distinction between active and passive source code generators from the "Pragmatic Programmer" book [1], I have organized metaprogramming into the hierarchy shown in picture 2.

Let us look closer at each of these approaches.

Source Code Generator

This kind of tool generates human-readable source code, either as standalone files or as a chunk inserted at a predefined place in existing code files (modules or units, depending on the programming language).

Depending on when it is used, it can be either active or passive, and depending on where it is used, it can be either in-source or external.

Active In-Source code generator

Active in-source code generators insert code during compilation based on context-free logic, so they are not directly linked to the semantics of the code they are called from (for example, C preprocessor directives insert code based on compiler definitions).

The compiler does not verify this kind of code generation in any way; it verifies only the generated code.

Active code generators act each time the code is compiled.

Implemented by: C preprocessor directives, Go's //go:generate comment annotations (go build does not run these on its own, so go generate has to be wired into the build).

Active External code generator

These generators create plain source code that is not based on the existing code context. Instead, they act as a configurable external tool that is run each time the code is compiled. The process is very similar to that of active in-source generators.

Implemented by: an external go generate call, the SWIG wrapper code generator.

Passive External code generator

Passive code generators are usually run once. Their output is reviewed by a programmer and then added to the code base as if it had been written manually.

Passive external code generators can be divided into two quite different categories:

- Template-based. This approach is used for the automated generation of boilerplate code when starting a new program or component. Most frameworks have a tool of this kind. Implemented by: IDE project templates and custom framework-specific project structure generators.

- AI-based. This is the newest way of generating code. Such a tool acts as an intelligent agent that generates source code either from a task and requirements written in natural language, as a human would, or by analyzing the current code context directly in the IDE. Implemented by: ChatGPT and GitHub Copilot.
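The template-based category can be sketched in a few lines of Python. This is only an illustration under assumptions, not how any particular framework does it: the template text, the render_component helper, and the Inventory component are all made up for the example.

```python
from string import Template

# Hypothetical boilerplate template; $class_name and $field are placeholders.
COMPONENT_TEMPLATE = Template('''\
class $class_name:
    """Generated once, then maintained by hand."""

    def __init__(self, $field):
        self.$field = $field

    def describe(self):
        return f"$class_name({self.$field!r})"
''')


def render_component(class_name: str, field: str) -> str:
    """Return source text for a new component (hypothetical helper)."""
    return COMPONENT_TEMPLATE.substitute(class_name=class_name, field=field)


# The generator runs once; a programmer reviews the output and commits it
# as if it were ordinary hand-written code.
source = render_component("Inventory", "items")
print(source)
```

The output is plain source text, so nothing ties it to the generator afterwards, which is exactly what makes this kind of generator passive.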

Runtime Code Generator at Compile-Time

So we're done with the generators that create human-readable source code. Another kind of generator creates runtime code directly, bypassing the human-readable stage. These are instructions, written in the programming language itself, that describe what executable code is to be generated at compile time.

Unlike plain source code generators, runtime code generators are part of the programming language itself: they are processed and verified by the compiler during compilation.

These tools differ mainly in their capabilities, and therefore differ greatly in how complex they are to use.

Inlines

Inlining is the simplest code-generation mechanism: the body of the callable is injected directly at the call site.

Here I mean only intentional inlining, not inlining that happens for other reasons. C++ inline functions, for example, are hints to the compiler's optimizer rather than a metaprogramming technique.

Inlining lets us optimize execution by eliminating the overhead of the standard call mechanism.

Implemented by: Nim templates and Scala's inlining.

Generics

This mechanism lets us implement an algorithm once for different data types by passing the types as parameters (picture 1 shows a generic function in C++ implemented via templates).

Generics are often implemented by simply copying the implementation body for each data type used (picture 1), as C++, Rust, and Nim do. At runtime we then have several copies of the code, one for each concrete data type the generic is called with. Java is a notable exception: it erases type parameters and keeps a single copy.

Generics save working time and elegantly follow the don't-repeat-yourself principle.

Implemented in Java, C#, Nim, C++, Rust, and many other languages as a first-class language feature.

Compile-Time Function Execution (CTFE)

This abbreviation comes from the D programming language. Other communities call the same thing compile-time function evaluation or generalized constant expressions, and there may be other names I'm not aware of.

Either way, at the core of this kind of metaprogramming is a restricted set of language features that a function may use when it is executed at compile time.

Compile-time function execution lets us optimize runtime by pre-calculating constant values at compile time (for example, trigonometric function tables or string hashes), or even generate raw source code strings and pass them on for further compilation.

CTFE has really become a trend in modern programming languages. For example, Rust implements this technique via constant expressions and constant functions, and Zig has comptime evaluation as well.

Implemented by: D language compile-time functions, Nim static statement, C++ constexpr and consteval, Rust constant expressions and constant functions, Zig comptime evaluation.

AST generator and operator

This is the richest and most complex way of metaprogramming, and only a few programming languages support it.

The most popular term for it is macros. However, the term macros has many other meanings in other programming languages, so I have come up with the term AST generator and operator (AGO) to eliminate any confusion.

It allows AST (abstract syntax tree) manipulation: creating, modifying, and deleting nodes, and thus generating any functionality in code. This makes it possible to create DSLs (domain-specific languages) or even add new features that are "performance-free" and look like language-level constructs to the programmer.

The most prominent use of the AST generator and operator approach is Nim's async/await implementation, which lives in the standard library and required no modification of the language itself. There are also some interesting use cases where Nim code is translated into GLSL shaders at compile time. This is where the real power of metaprogramming lies.

Even with AST generators and operators at hand, metaprogramming still seems to be a largely unexplored part of programming.

Implemented by: Nim macros, Scala macros, and C#.NEXT.
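To give a feeling for what operating on an AST means, here is a rough sketch in Python. Note the assumption: Python has no compile-time macros, so the transformation below happens at runtime, just before the tree is compiled to bytecode. It only illustrates the idea of rewriting the tree instead of the text. The InlineSquare class and the square name are hypothetical.

```python
import ast
import copy


class InlineSquare(ast.NodeTransformer):
    """Rewrite calls like square(x) into x * x when x is a plain name."""

    def visit_Call(self, node):
        self.generic_visit(node)  # transform nested calls first
        is_square = isinstance(node.func, ast.Name) and node.func.id == "square"
        # Only plain names are rewritten, so duplicating the argument
        # cannot evaluate a side effect twice.
        simple_arg = (
            len(node.args) == 1
            and not node.keywords
            and isinstance(node.args[0], ast.Name)
        )
        if is_square and simple_arg:
            arg = node.args[0]
            product = ast.BinOp(left=arg, op=ast.Mult(), right=copy.deepcopy(arg))
            return ast.copy_location(product, node)
        return node


source = "result = square(n) + 1"
tree = InlineSquare().visit(ast.parse(source))
ast.fix_missing_locations(tree)

rewritten = ast.unparse(tree)  # readable form of the new tree (Python 3.9+)

namespace = {"n": 5}  # note that no square() function is defined anywhere
exec(compile(tree, "<ast>", "exec"), namespace)
result = namespace["result"]
```

The executed code never calls square: the call was replaced in the tree before compilation. A macro system like Nim's does the same kind of rewriting, but inside the compiler.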

Runtime Code Generator at Runtime

That was the last kind of compile-time metaprogramming. To be comprehensive, the following sections of this essay are devoted to metaprogramming in interpreted languages, although it is not directly related to the title.

All these tools are heavily used now, especially those based on introspection and reflection (both for code analysis and modifications).

Reflection-based Code Modifier

This is possibly the most hated technique.

At its core, it means altering code behavior at runtime (for example, adding or removing object methods). Most interpreted programming languages support this kind of functionality, but the approach is usually discouraged by the community and thus not widely used.

Sometimes this approach is also called "monkey patching".

Implemented by: interpreted programming languages; see the Python gevent library for reference.
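Here is a minimal sketch of adding, replacing, and removing methods at runtime in Python. The Greeter class and the shout function are hypothetical names used only for illustration; libraries like gevent apply the same idea to standard library modules.

```python
class Greeter:
    def greet(self, name):
        return f"Hello, {name}"


def shout(self, name):
    # Replacement behavior, defined outside the class.
    return f"HELLO, {name.upper()}!"


greeter = Greeter()
original = Greeter.greet

Greeter.greet = shout  # replace a method on the class at runtime
Greeter.farewell = lambda self, name: f"Bye, {name}"  # add a new method

patched_result = greeter.greet("Ada")  # existing instances see the patch
added_result = greeter.farewell("Ada")

del Greeter.farewell  # remove the added method again
Greeter.greet = original  # restore the original behavior
restored_result = greeter.greet("Ada")
```

Nothing in the class definition hints that greet may behave differently later, which is a large part of why the technique is disliked.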

Runtime evaluation and execution

This kind of metaprogramming is allowed by basically all interpreted programming languages I know.

It works by generating plain source code, or ingesting it from outside the program, and executing it at runtime. This allows us to build nice things like software that is updated without a restart, plug-in systems, and so on.

Implemented by: many high-level interpreted languages such as Python and JavaScript allow it out-of-the-box.
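A plug-in loader of this kind can be sketched with Python's built-in compile, exec, and eval. The load_plugin helper and the plug-in source are assumptions made up for this example, and the source is hard-coded so the sketch stays self-contained.

```python
# Source text that could come from a file, a database, or a network request.
PLUGIN_SOURCE = """
def transform(value):
    return value * 2
"""


def load_plugin(source: str, name: str = "<plugin>") -> dict:
    """Compile and execute source text, returning the names it defines."""
    namespace: dict = {}
    code = compile(source, name, "exec")
    exec(code, namespace)  # never do this with untrusted input
    return namespace


plugin = load_plugin(PLUGIN_SOURCE)
doubled = plugin["transform"](21)

# "Updating without restart": load a new version of the same function.
plugin = load_plugin(PLUGIN_SOURCE.replace("* 2", "+ 1"))
incremented = plugin["transform"](21)

# eval() handles single expressions rather than whole modules.
total = eval("a + b", {"a": 2, "b": 3})
```

The program keeps running while transform is swapped for a new version, which is the core of the update-without-restart idea.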

Direct Runtime bytecode modifier

This is considered the most barbaric way to deal with your program at runtime, but still, it is technically possible and some programmers use this approach in their programs mainly for optimization.

Simply put, it is done by decompiling, modifying, and recompiling bytecode at runtime. I won't go deep into the details, but you can easily find in-depth articles on the Internet about how programmers abuse Python bytecode at runtime.

Implemented by: Python dis.
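The following is a deliberately mild sketch of the idea in Python. It makes some assumptions: the dis module itself only inspects bytecode, so the change is made through the code object, and it swaps a constant rather than rewriting instructions, because instruction-level rewriting depends heavily on the Python version. The greeting function is hypothetical.

```python
import dis


def greeting():
    return "hello"


# dis only inspects: list the instructions the interpreter will run.
opnames = [instr.opname for instr in dis.get_instructions(greeting)]

# Code objects are immutable, so "modification" means building a new one.
# CodeType.replace() (Python 3.8+) copies a code object with fields changed.
old_code = greeting.__code__
new_consts = tuple(
    "patched" if const == "hello" else const for const in old_code.co_consts
)
greeting.__code__ = old_code.replace(co_consts=new_consts)

result = greeting()  # the source still says "hello", the code object does not
```

After the swap the source text and the executed code disagree, which shows why this technique has the reputation it has.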

AST Generator and Operator

This is what I think can be the future of metaprogramming.

Apart from AGO, the other kinds of metaprogramming are more or less stable and have widely known usage patterns in different programming languages. This one is different, as it requires seriously designed language support. A wider range of programming languages has started to support it only in the last few years, and it is still not explored enough.

But either way, there are signs in favor of this most capable kind of metaprogramming.

Fresh Developments

Most of these tools reached their first major versions less than five years ago. There are now three production-ready AOT-compiled programming languages that operate at the AST level with dedicated mechanisms (I would be very grateful to readers who point out more such tools, if they exist, so I can update the table), and they are available on all major platforms:

| Language | Mechanism | Platform |
| --- | --- | --- |
| Nim | macros | Native / Web |
| Scala | macros | JVM / Native |
| C# | .NEXT | .NET |

This shows rising interest in this kind of metaprogramming, because only ten years ago there were next to no production-ready AOT-compiled languages that offered a transparent way to automate work with the program's AST.

Here we can draw parallels between metaprogramming and graphics programming, which was based on a fixed function pipeline at first, and then transitioned to a programmable graphics pipeline based on shaders.

The world of business is now fast as hell

This requires rolling out new feature-rich releases faster basically everywhere. Even the most stable things in our world, like the Linux kernel and web browsers, have been on that path for years. This pushes software engineers to find ways to automate building solutions and to speed up writing clean code, so we invent things like procedural or AI-based code generation.

While AI-based generation has already proved helpful as a programmer's assistant, its behavior is quite stochastic, whereas procedural code generation is strictly deterministic. The two differ in reliability of outcome and in time of use in much the same way as passive and active code generators do.

Compile time is more performance friendly

The fact that you can do something at compile time means it will not be executed at runtime, which means fewer bugs and better performance. If you don't overdo it, build times will not grow dramatically.

Game programmers, who usually work in C++, have been using constant expressions to calculate string hashes for years.

Still, The Future of AGOs Is Nebulous

Here are some thoughts about why the current state of metaprogramming development is what it is.

Bad Readability - the root cause of all evil

Languages with powerful reflection and introspection tools, and their communities, use metaprogramming heavily because these tools operate at the same level as runtime code.

This means that code is executed or generated as the interpreter gets to it. Generated code and the code that generates it are executed in the same time and space. So when you read the code, it naturally executes as you follow the control flow.

This is very different for AOT-compiled languages. Code that runs at compile time is absent from the executable file. Moreover, when you look at a module in your favorite IDE you don't see the code that will be executed at runtime; you have to imagine that it will eventually be there :) This is still hard to embrace and hard to use, and it requires a richer toolset to be comfortable.

Additional Debugging Phase

The second problem is an outcome of the first one. We have another layer/phase of code execution before runtime, which means you have one additional stage of debugging.

And this is not simple, because debugging compile-time code means reading hierarchical AST structures in the terminal or reading generated code (for example, Nim uses C as an intermediate representation, which is then compiled into native code). When I make an error, I spend a lot of time inside generated C files that are much less readable than those written by humans.

Other languages are compiled directly into native code, so you don't even have this option.

Additional Testing Phase

Where we have to debug, we have to test. I don't have a well-argued view on how this can even be done for compile-time programming, but I'm fairly sure that in this case we need not make testing a separate procedural part of development.

This follows from the nature of what compile-time code produces. The result of compile-time execution is code itself, so we work with it in a fully controlled environment. Using a code generator in itself exercises its usage scenarios. So, if it works, it is bug-free.

On the other hand, each new usage can trigger an error, so we can never be fully sure about our AGO code.

My Viewpoint For Future

I've been doing metaprogramming for some time. It has rich potential for automating and optimizing a programmer's everyday work in various areas: content generation, graphics programming, testing, optimization, etc.

Every year more and more programming languages support metaprogramming, so supporting CTFE is now the rule for new languages rather than the exception it was only a few years ago.

Not that many languages support direct AST manipulation as of today, and that is okay, because there is still only a vague understanding of its power and usability.

What's most interesting about that kind of metaprogramming is that everything depends on your imagination and how far it can reach.

I encourage you to try. Metaprogramming is an interesting experience that is a lot of fun for whoever uses it and can bring a lot of unexpected positive outcomes.

References

[1] D. Thomas, A. Hunt, "Pragmatic Programmer", 20th anniversary edition, 2019.

Special Thanks

To Reddit user catcat202X for pointing out Zig and Rust CTFE, actually the freshest languages out there.
